From Deepfakes to Fake Identities: How Threat Actors Are Weaponising AI

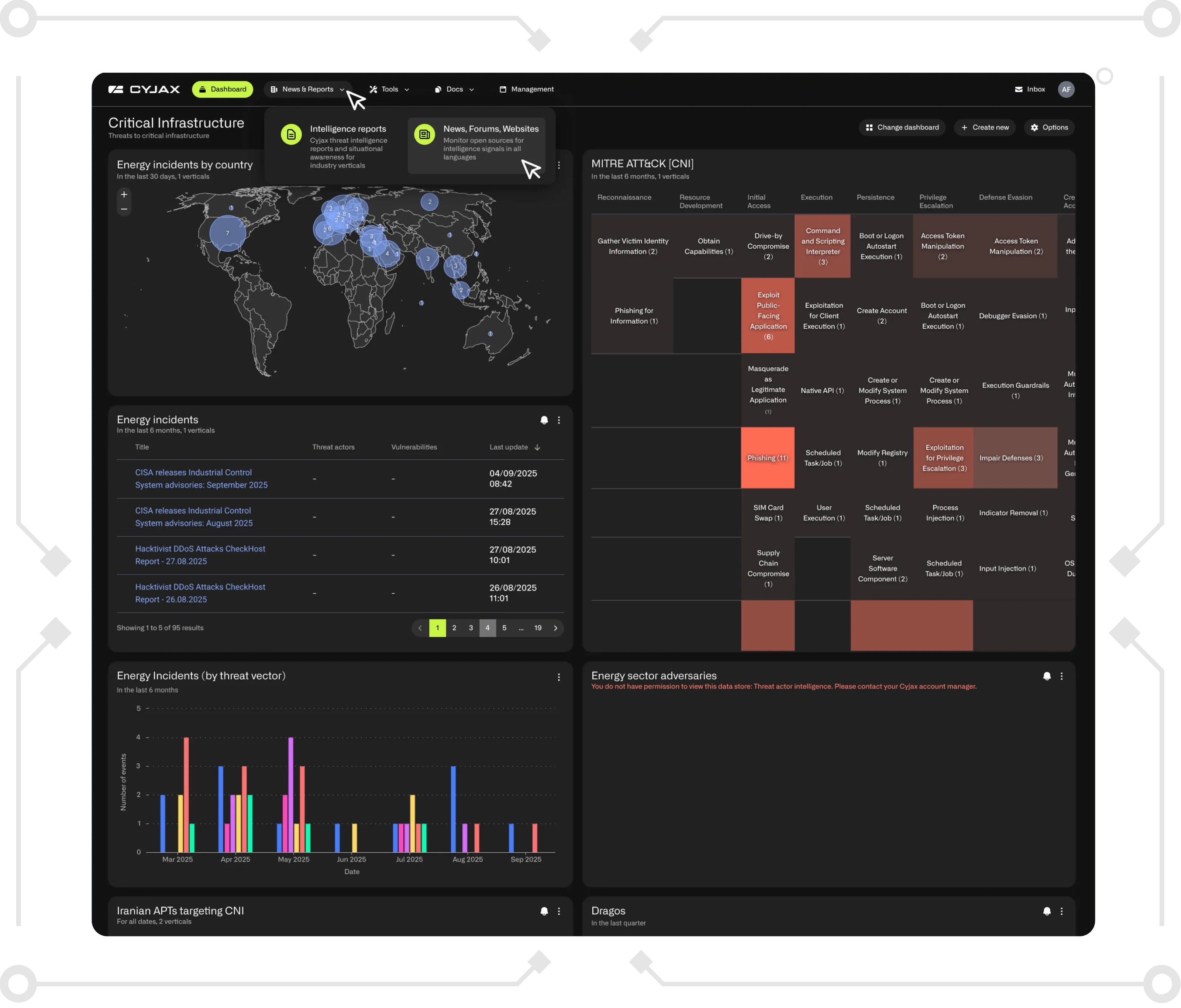

CYJAX has identified a growing number of alleged AI-based tools being developed and shared across threat actor forums, for both fraud and wider cybercriminal purposes. The trend highlights the need for proactive monitoring and defence against evolving AI-driven threats.

One such tool, shown below, was developed and shared on a Russian-language forum to attract user feedback. Reportedly built to create a “fake identity”, it generates details including social media, job, and financial data. In this case, CYJAX’s Russian-language specialists noted that the tool’s output failed to bypass Gemini. Although the tool is still in active development, it is a good example of what is currently being produced organically within threat actor circles.

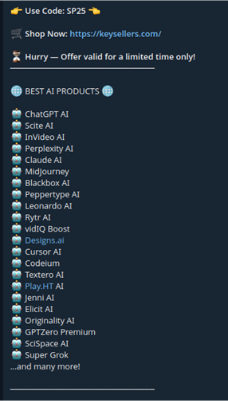

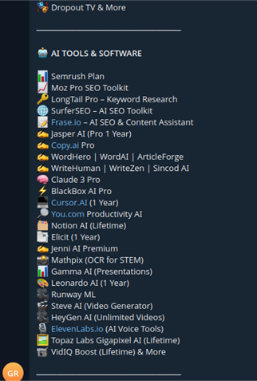

Alongside threat actors developing their own tools, CYJAX has identified “key sellers”. These sellers offer keys to most, if not all, commercially available AI tools, often at a lower cost than the recommended retail price (RRP).

AI-related fraud methods are not typically offered for sale, as most threat actors with access to commercially available AI tools prefer to keep their tooling effective for both onboarding fraud and wider fraud. Historically, once a method becomes public (through purchase or public sharing), it becomes increasingly easy to detect. For this reason, CYJAX is seeing fewer threat actors offering AI-based methods, as they seek to protect their own operations.

That said, CYJAX analysts have found references to such methods in monitored chats. Below is a list of categories of commercially available tools and their current applications in fraud.

- Deepfake creation (face-swapping)

Fraudsters use this tool to create fake videos or images by swapping faces, often to impersonate celebrities, public figures, or victims. This is typically used in impersonation scams such as fake endorsements or investment schemes.

- AI-powered face-swapping app

Used to place deepfaked faces onto video clips for onboarding scams, impersonation scams, or fake promotional videos. Threat actors also use it to generate fake social media posts or video testimonials.

- AI for generating synthetic images (faces, landscapes, etc.)

Used to create realistic but fake profile pictures for fraudulent social media accounts, often employed in romance scams or to establish false credibility for investment schemes.

- AI-driven natural language processing for generating text-based content

Threat actors use ChatGPT to automate phishing emails, create fake reviews, write deceptive product descriptions, or manipulate users through social engineering in fake conversations.

- AI-based image generation and art manipulation

Used to generate fake advertisements, deceptive product images, or counterfeit promotional materials for fraudulent products such as fake art sales or scam offerings.

- AI for creating and editing images, video, and audio

Used to generate fake promotional videos or audio clips, such as forged celebrity endorsements or fake interviews, to add credibility to scams.

- AI-based writing, voice synthesis, design, and deepfake creation

Threat actors often hire freelancers who use AI tools to provide services such as writing fake reviews, recording fake voiceovers for scam phone calls, or manipulating media content (audio or video) for fraudulent campaigns.

- AI-based video generation (synthetic avatars that speak in videos)

Used to create fake spokesperson videos promoting fraudulent products, services, or investment opportunities. These synthetic videos appear realistic, making scams seem more credible.

- AI for creating synthetic voices and voiceovers

Used to clone voices of real people (such as friends, celebrities, or public figures) to create fake audio recordings for extortion or fraud (e.g., fake phone calls, blackmail).

- AI to generate realistic images and videos

Used to create fake product images (such as electronics or fashion items) to advertise counterfeit goods on online marketplaces or fraudulent e-commerce sites.

- Natural language processing and text generation

Used to create fake social media posts, scam ads, or automated responses to manipulate users, promote fraudulent services, or gather personal information.

- AI music generation

Used to create custom music tracks or sound effects for scam videos, making them more convincing when promoting fake schemes or fraudulent services.

- AI for image recognition and manipulation

Used to create fake product images that are indistinguishable from legitimate ones, typically in scams involving counterfeit goods sold as authentic items.

- Facial recognition AI

Allows threat actors to track down individuals’ public photos across the web and use their likeness for identity theft, fake profiles, or impersonation scams on social media.

- AI-driven copywriting tool

Used to generate convincing scam emails, sales pages, or fake product descriptions, making fraudulent offers appear legitimate and well-crafted.

- AI-based visual recognition for scanning QR codes

Used to create fake QR codes that lead to phishing websites or malicious apps. When users scan these codes, they are redirected to fraudulent sites that steal personal information or money.

- AI that helps with customer relationship management (CRM) and sales automation

Used to create automated fake sales outreach, offering fake services or discounts on social media to gather sensitive personal information.

- AI tool for transcribing speech to text

Used to transcribe fake voice messages or phone calls from supposed authorities, encouraging users to act on fraudulent offers.

- AI-powered content scheduling and automation for social media

Used to automate fake social media posts and promotional campaigns for fake products, services, or schemes (e.g., fake cryptocurrency or online businesses).

Stay Ahead with CYJAX

As threat actors continue to experiment with AI for malicious purposes, understanding their methods and tools is crucial for maintaining resilience. CYJAX provides trusted, validated, and actionable threat intelligence to help organisations stay ahead of emerging risks.

➡️ Learn more about how CYJAX monitors, analyses, and mitigates threats: www.cyjax.com
