The Usage of AI by Threat Actors

CYJAX has conducted research into the known use of AI on social media for fraudulent activities, uncovering how malicious actors exploit these technologies to deceive users and spread misinformation.

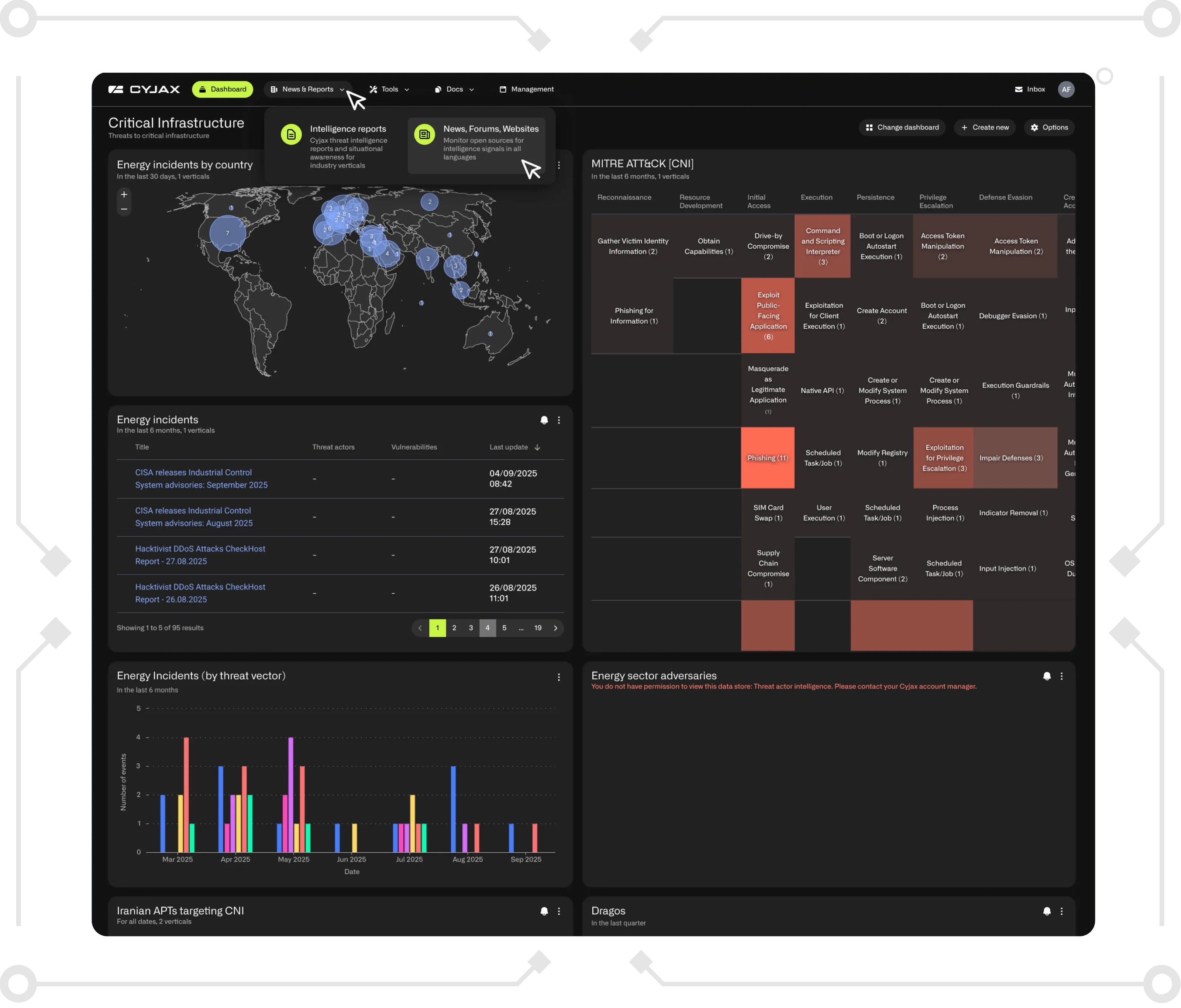

Using both skilled analysts and advanced technology for threat intelligence is essential to achieving a complete and proactive cybersecurity posture. Technology enables the rapid collection, correlation, and analysis of massive volumes of threat data, identifying patterns and anomalies that would be impossible to detect manually. However, human analysts bring critical thinking, contextual understanding, and strategic insight, skills that machines alone cannot replicate. Together, they create a powerful synergy: technology accelerates detection and response, while analysts validate findings, interpret intent, and guide meaningful action. This is the CYJAX advantage, combining cutting-edge automation and AI-driven intelligence with expert human analysis to deliver accurate, relevant, and actionable threat insights.

Building on this approach, CYJAX has conducted research into the known use of AI on social media for fraudulent activities, uncovering how malicious actors exploit these technologies to deceive users and spread misinformation.

Key Takeaways

- CYJAX has observed AI being used by cybercriminals and attackers for a range of automated tasks. This includes creating and adapting malware to use in AI-generated phishing scams.

- AI is used to generate a range of different media formats such as photos, videos, audio clippings, and text. This artificially generated content can be used by cybercriminals for a variety of different fraudulent activities, including celebrity video deepfakes.

- CYJAX has also observed threat actors using AI to generate personalised phishing emails. Some of these include hidden unformatted messages instructing AI-powered inbox screeners that the email is from a trusted and legitimate source.

- An increase in tools available for deepfakes and other AI generated images and videos has been observed. This has led to an increase in their use in fraud and cybercrime.

- Scammers and cybercriminals are using various AI tools to target individuals, businesses, and government departments.

Introduction

CYJAX has conducted research into the known use of AI on social media for fraudulent activities. This activity has been linked to the impersonation of brands and public figures. Below is a collection of CYJAX's findings from threat actor communities, known social media-based cases, and victim reporting.

Known AI-related case studies:

- Romance scam/celebrity impersonation: A woman was scammed approximately £10,000 by scammers using deepfake videos purporting to be George Clooney. The fraudsters used a fake Facebook account and convincing AI generated content of Clooney.

- Arup deepfake scam: Arup lost approximately £20 million after a deepfake video call impersonated senior officers. The fraud involved fake voices, videos, and images to convince an employee to transfer large amounts of money.

- Deepfake scams pushing investment schemes, public figures misrepresented: Elon Musk’s image and voice have been used in fake videos promoting investment advice which is not officially linked to him.

Commonly Used tools

Below is a list of the types of tools currently being used to facilitate fraud.

1. Deepfake Creation - Fraudsters use tools like this to create fake videos or images by swapping faces, often to impersonate celebrities, public figures, or even victims. This is typically used in impersonation scams, such as fake endorsements or investment schemes.

2. AI for generating synthetic images - Used to create realistic but fake profile pictures for fraudulent social media accounts. These are often used in romance scams or to establish false credibility for investment schemes.

3. AI-driven natural language processing for generating text-based content - Threat actors use tools like this to automate phishing emails, create fake reviews, write deceptive product descriptions, or manipulate users through social engineering techniques in fake conversations.

4. AI-based image generation and art manipulation - Fraudsters use it to generate fake advertisements, deceptive product images, or counterfeit promotional material for fraudulent products. This includes fake art sales or fraudulent product offerings.

5. AI for creating and editing images, video, and audio - This can be used to generate fake promotional videos or audio clips, such as forged celebrity endorsements or fake interviews, to boost credibility to scams.

6. AI-based writing, voice synthesis, design, and deepfake creation - Threat actors often hire freelancers using AI tools for services like creating fake reviews, fake voiceovers for scam phone calls, or manipulating media content for fraudulent campaigns.

7. AI-based video generation - Threat actors use tools like these to create fake spokesperson videos which promote fraudulent products, services, or fake investment opportunities. These synthetic videos look realistic and help scams appear more credible.

8. AI for creating synthetic voices and voiceovers - Tools like this can be used to clone the voices of real people to create fake audio recordings for extortion or fraud.

9. AI to generate realistic images and videos - Used to create fake product images such as electronics or fashion items which threat actors use to advertise counterfeit goods in online marketplaces or fraudulent e-commerce sites.

10. Natural language processing and text generation - Threat actors can use it to create fake social media posts, scam ads, or automated responses to manipulate users, promote fraudulent services, and gather personal information.

11. AI Music Generation - Threat actors can use these tools to create custom music tracks or sound effects for scam videos, making them more convincing when promoting fake schemes or fraudulent services.

12. AI for image recognition and manipulation - Used to create fake images which are indistinguishable from legitimate products. This is typically used in scams involving counterfeit goods which are sold as legitimate items.

13. AI-driven copywriting tool - Threat actors can use it to generate convincing scam emails, sales pages, or fake product descriptions, making fraudulent offers appear legitimate and well-crafted.

14. AI-based visual recognition for scanning QR codes - Threat actors create fake QR codes which lead to phishing websites or malicious apps. When users scan these codes, they are directed to fraudulent sites which steal personal information or money.

15. AI which helps with customer relationship management (CRM) and sales automation - Threat actors use this to create automated fake sales outreach, offering fake services or discounts on social media to gather sensitive personal information.

16. AI tool for transcribing speech to text - Threat actors use this tool to transcribe fake voice messages or phone calls from supposed authorities, encouraging users to act on fraudulent offers.

17. AI-powered content scheduling and automation for social media - Threat actors use tools like this to automate social media posts and promotional campaigns for fake products, services, or schemes such as fake cryptocurrency or online businesses.

Emerging Threats/Uses of AI

AI in Malware and Phishing Scams

Threat actors are using various AI tools to assist with attacks. CYJAX has recently observed malware powered by large-language models (LLMs). The malware contains embedded API keys, allowing it to dynamically alter its code after installation and maximise the destruction of a system.

Threat actors are using modified versions of LLMs to generate malware and analyse attack services. Modified LLMs such as WormGPT and FraudGPT have been altered to bypass OpenAI’s rules against generating malicious code and other harmful content.

CYJAX has recently monitored an increase in LLM-powered malware, with threat actors using this in several spear-phishing campaigns. These campaigns use phishing emails which are written by AI. As seen with the use of bots on social media and instant messaging platforms, threat actors are increasingly using AI to automate tasks to save time and target a larger demographic. This method also allows threat actors to continue the running of operations even when not physically online. Subsequently, this allows threat actors to target more victims and achieve a potential higher success rate in fraud activity.

Get Started with CYJAX CTI

Empower Your Team. Strengthen Your Defences.CYJAX gives you the intelligence advantage: clear, validated insights that let your team act fast without being buried in noise.